ASRock Rack 6U8X-EGS2 H200 Rear Hardware Overview

There is a lot going on at the rear of the system, so we thought we would go through it by building it up piece by piece.

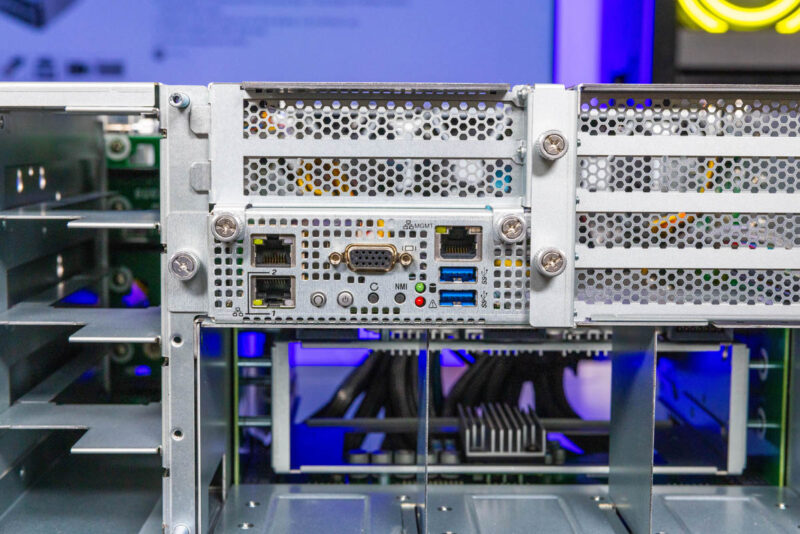

Here is the rear I/O block. There are a few neat bits. First, there are only two USB 3 ports in the rear, and four in front. We still get the power and reset buttons, and status LEDs, along with our two Intel i350 1GbE ports and out-of-band management port. We are not going to go into the management in this review, other than to say this uses industry standard IPMI management based on an ASPEED AST2600 chipset with HTML5 iKVM, monitoring, and so forth. Perhaps the big takeaway is that this system is one of the few we see with more USB ports on the front than the rear.

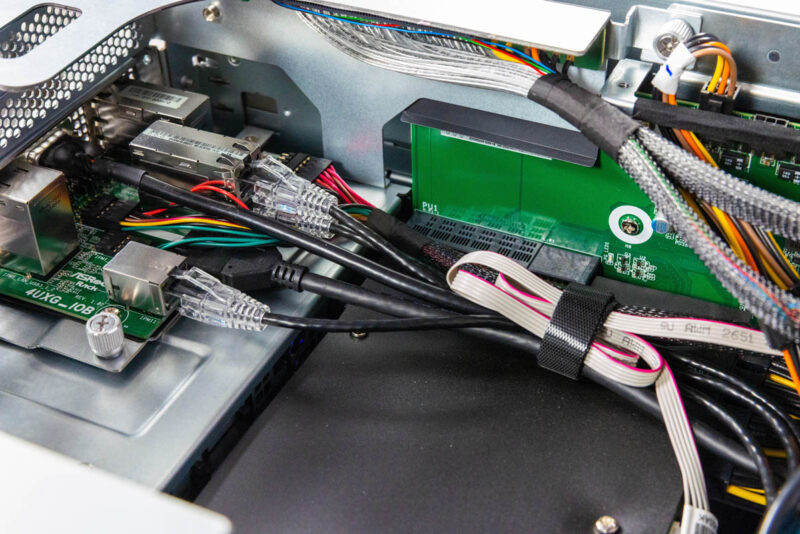

Getting the 1GbE ports and management ports to the rear includes a path through the chassis using thin 1GbE cables. Those cables plug into the back of the ASRock Rack 4UXG_IOB and take the three front ports and help expose them to the rear of the chassis. Instead of needing front and rear I/O, you can pick and choose.

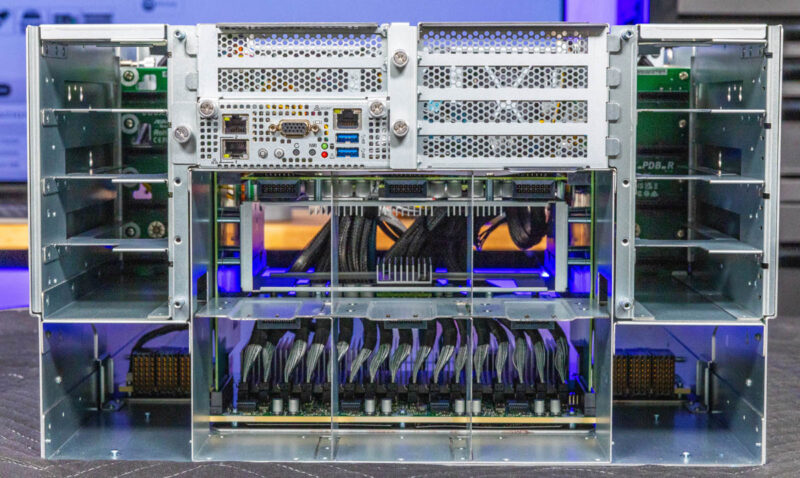

We have already shown the riser slots for the North-South network cards (usually BlueField-3 DPUs) so it is now time to get into the rest of the rear of the chassis.

First, at the bottom, here is the massive set of MCIO cables that go into the PCIe switch board. This shot alone has a line of sixteen MCIO cables connected for 128 lanes of PCIe Gen5.
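As a quick sanity check on those numbers, here is a minimal sketch of the lane math. The cable count and total lane count come from the shot above; the per-lane rate assumes the standard PCIe Gen5 figure of 32 GT/s with 128b/130b encoding, and the function names are ours for illustration only.

```python
# Back-of-the-envelope PCIe lane math for the MCIO cable bundle.
# Assumptions (not measured on this system): 32 GT/s per Gen5 lane,
# 128b/130b line encoding, and no protocol overhead beyond encoding.

GEN5_GT_PER_S = 32.0          # raw transfer rate per lane
ENCODING_EFFICIENCY = 128 / 130


def lane_gbytes_per_s(gt_per_s: float = GEN5_GT_PER_S) -> float:
    """Approximate one-direction bandwidth of a single lane in GB/s."""
    return gt_per_s * ENCODING_EFFICIENCY / 8


def summarize_mcio(cables: int, total_lanes: int) -> dict:
    """Derive per-cable width, x16 link count, and aggregate bandwidth."""
    return {
        "lanes_per_cable": total_lanes // cables,
        "x16_links": total_lanes // 16,
        "total_gbytes_per_s": total_lanes * lane_gbytes_per_s(),
    }


summary = summarize_mcio(cables=16, total_lanes=128)
print(summary)
```

Sixteen cables carrying 128 lanes works out to eight lanes per cable, or the equivalent of eight x16 links. The bandwidth figure is a theoretical per-direction ceiling, not something we measured.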

ASRock has quite a few structures built around these inside the chassis for power delivery, fan control and more.

Since this area is behind the NVIDIA HGX H200 8 GPU baseboard, these components often need heatsinks to stay cool.

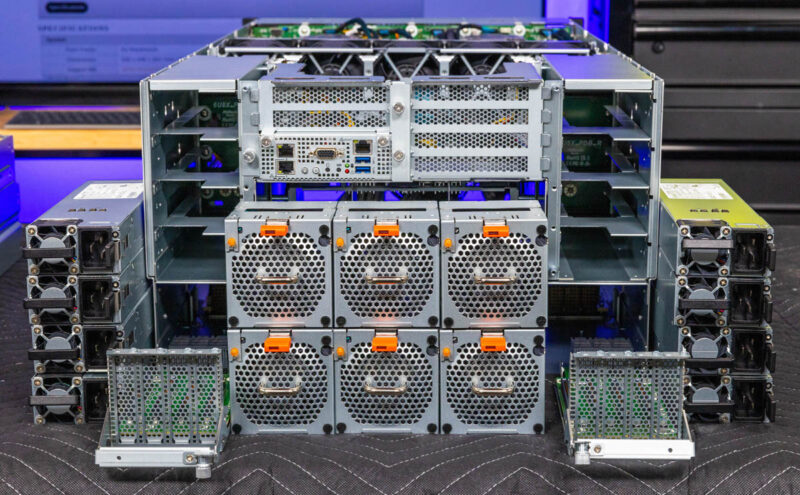

Aside from the front fans on this bottom 4U area, there are six rear fan modules as well.

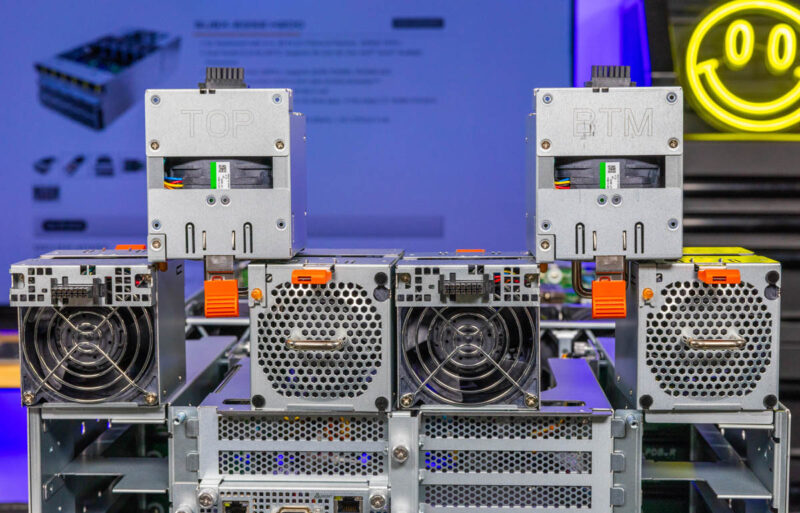

ASRock Rack’s solution for ensuring the correct fans are used in the correct orientation is to have TOP on three of them.

The other three fans are the “BTM” for bottom fans.

These fans plug into the hot-swap connectors.

Six fans later, and we have the center part of the rear put together.

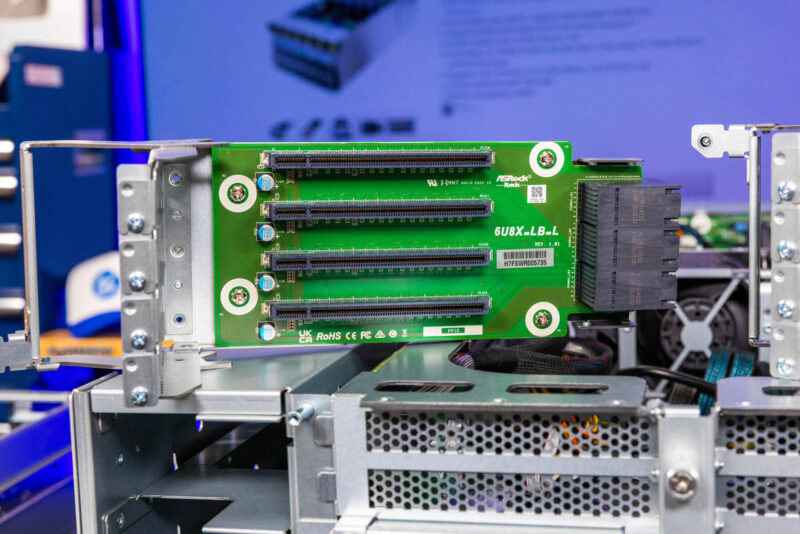

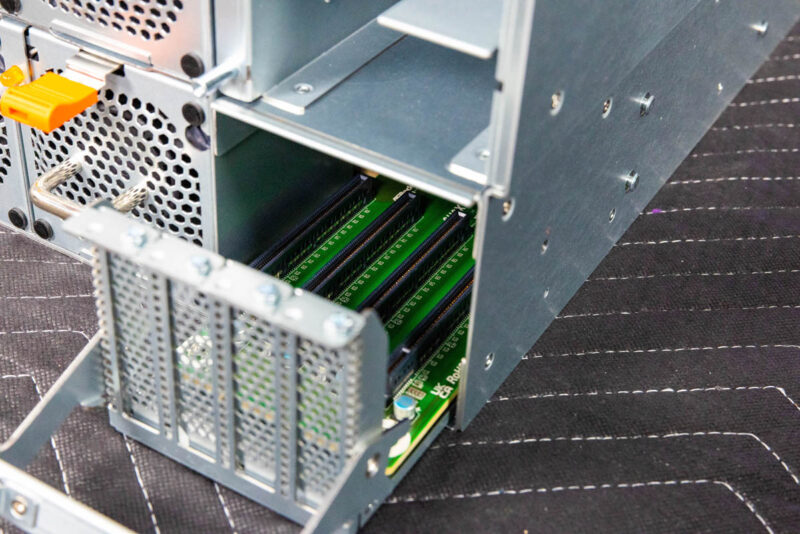

Next, some may wonder what those big connectors are for on either side of the PCIe switch board.

Those are for the two PCIe NIC trays.

Each tray has four PCIe Gen5 x16 low-profile slots for a total of eight. That count is so we can have one NIC per GPU.

We used NVIDIA ConnectX-7s for these, which can come in Ethernet or InfiniBand flavors. Each GPU therefore gets 400Gbps of dedicated external connectivity.
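To put the one-NIC-per-GPU layout in perspective, here is a small sketch of the aggregate East-West bandwidth. It assumes all eight slots are populated with 400Gbps NICs as in our configuration; the function name is hypothetical and the figures are line rate, not measured throughput.

```python
# Aggregate external bandwidth for a one-NIC-per-GPU layout.
# Assumption: every slot holds a 400Gbps NIC running at line rate.

def aggregate_nic_bandwidth(num_nics: int, gbps_per_nic: int) -> dict:
    """Return total bandwidth in Gbps, Tbps, and GB/s (one direction)."""
    total_gbps = num_nics * gbps_per_nic
    return {
        "total_gbps": total_gbps,
        "total_tbps": total_gbps / 1000,
        "total_gbytes_per_s": total_gbps / 8,
    }


# Two trays with four slots each, one NIC per GPU.
nic_totals = aggregate_nic_bandwidth(num_nics=2 * 4, gbps_per_nic=400)
print(nic_totals)
```

With eight NICs, that is 3.2Tbps of line-rate connectivity for the GPUs, before counting the North-South cards.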

The trays slide out easily with a lever, making them easy to service.

Here are the two NIC trays installed, leaving only the power supplies to install.

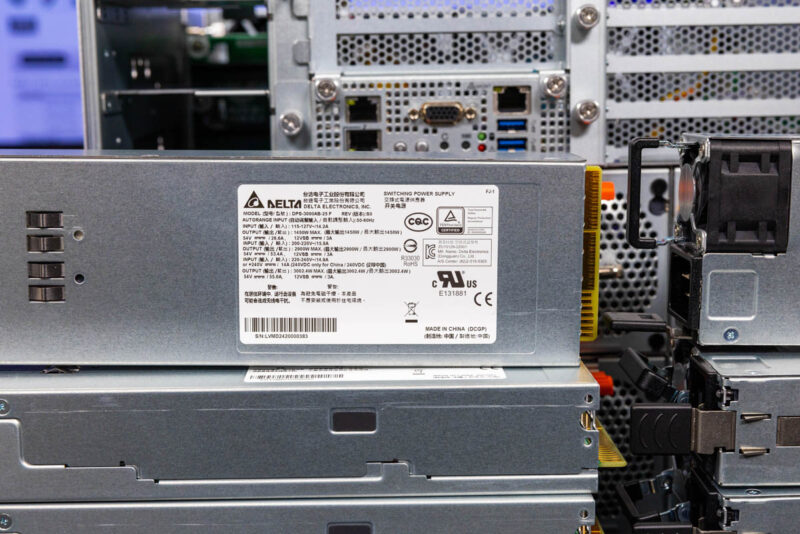

For the system, we get eight 3kW 80Plus Titanium level power supplies made by Delta. ASRock Rack is doing full 4+4 redundancy here. Companies like Supermicro have six PSUs in their air cooled systems as standard, but offer two more to get to this level of redundancy. It is cool to see ASRock Rack offering the higher spec here as standard.

Each of the 3kW PSUs is also interesting since it supplies 12V power to the main server parts while also supplying 54V power to the NVIDIA HGX H200 8 GPU board. Some vendors use two different types of power supplies to deliver the two voltages.

Four PSUs are installed on each side. Since each PSU supplies both 12V and 54V, we do not need to worry as much about which PSU is installed into which slot.

With those eight power supplies installed, or 24kW of power supplies, we now have all of the components installed in the rear of the system.
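For those who want the power math spelled out, here is a minimal sketch. It assumes each PSU delivers its full 3kW nameplate rating and that 4+4 means four supplies can fail while the remaining four carry the load; the function name is ours, and real-world capacity depends on input voltage and how the 12V and 54V rails are split.

```python
# Power budget for an N+M redundant PSU configuration.
# Assumption: each PSU delivers its full nameplate wattage.

def psu_budget(installed: int, redundant: int, watts_each: int) -> dict:
    """Return installed capacity and capacity usable with redundancy kept."""
    active = installed - redundant
    return {
        "installed_w": installed * watts_each,
        "redundant_capacity_w": active * watts_each,
    }


# Eight 3kW supplies in a 4+4 arrangement.
budget = psu_budget(installed=8, redundant=4, watts_each=3000)
print(budget)
```

So while there is 24kW of power supply capacity installed, the system has 12kW available if it is to keep full 4+4 redundancy.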

Next, let us get to the block diagram and performance.

STH team is doing such in-depth AI server reviews. I’d like to say thanks for this content.

The allowance for either front or rear access to the management NICs is a cute touch.

I’d be curious just how much cheaper low port count GbE switching would have to get to make reducing the internal connector count and just having both front and rear jacks live at the same time the preferred option. (or whether the unmanaged ones are already cheap enough; but customer requirements around BMC security and VLANs and such would require something nice enough that nobody bothers to make low port count versions of it that would be stupidly expensive in context.)

Great review