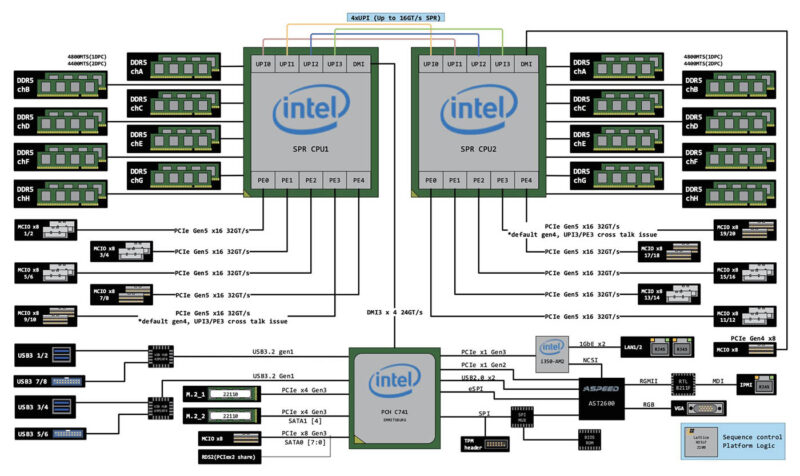

ASRock Rack 6U8X-EGS2 H200 Block Diagram

We are testing the server early in its cycle, so we do not have the server block diagram, but we managed to find the ASRock Rack SP2C741D32G-2L+ motherboard’s block diagram.

Something you will quickly notice is that all of the non-MCIO connector I/O hangs off of the Intel C741 PCH. This design leads to that massive row of MCIO connectors at the rear of the motherboard that is then used to connect to various components. There is even one of those connectors connected to the PCH. It may not seem like a big deal, but in previous versions of 8x GPU systems we would see an array of PCIe slots and high-density connectors.

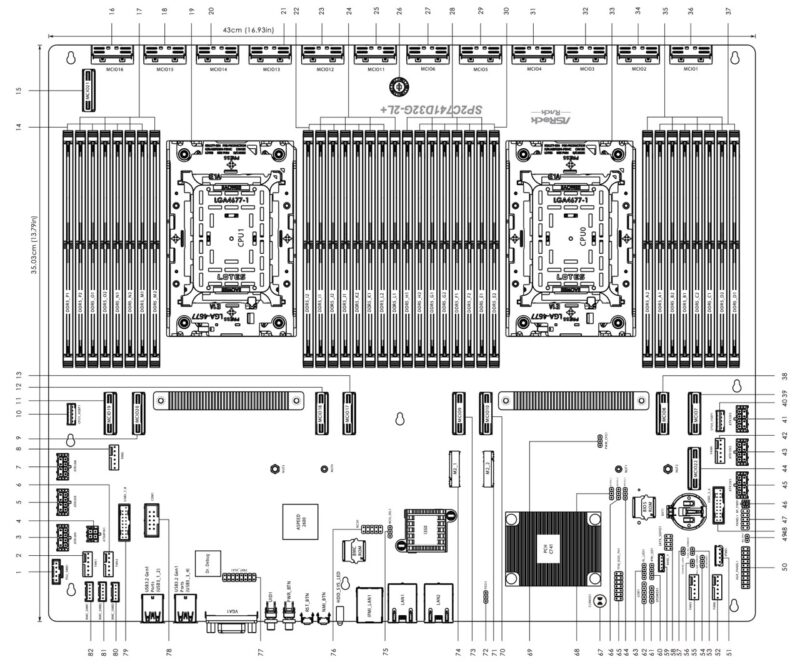

ASRock Rack SP2C741D32G-2L Plus Layout

This is a very modern motherboard design and one that really is highly customized for this system. It would be strange to see a motherboard with the entire trailing edge made up of MCIO connectors in a standard 1U or 2U server. There are a total of 20x PCIe Gen5 MCIO connectors, along with 1x PCIe Gen4 and 1x PCIe Gen3 MCIO connector. That helps to explain why we have so many cables.
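To put the connector counts in perspective, here is a rough lane and bandwidth tally. This is only a sketch: it assumes each MCIO connector carries eight lanes, which is a common MCIO width but is not something the block diagram discussion above confirms, and the function names are purely illustrative. The per-lane rates are the nominal PCIe figures, before protocol overhead.

```python
# Connector counts come from the layout description above.
MCIO_CONNECTORS = {"gen5": 20, "gen4": 1, "gen3": 1}

# ASSUMPTION: eight lanes per MCIO connector (not stated in the review).
LANES_PER_MCIO = 8

# Nominal PCIe transfer rate per lane in GT/s.
GT_PER_LANE = {"gen3": 8, "gen4": 16, "gen5": 32}


def lane_budget(connectors, lanes_per_connector=LANES_PER_MCIO):
    """Return the total lane count per PCIe generation."""
    return {gen: count * lanes_per_connector for gen, count in connectors.items()}


def raw_gbytes_per_s(gen, lanes):
    """Approximate one-direction bandwidth in GB/s.

    Gen3 through Gen5 all use 128b/130b line encoding; packet and
    protocol overhead are ignored, so real throughput is lower.
    """
    return GT_PER_LANE[gen] * lanes * (128 / 130) / 8


if __name__ == "__main__":
    for gen, lanes in lane_budget(MCIO_CONNECTORS).items():
        print(f"{gen}: {lanes} lanes, ~{raw_gbytes_per_s(gen, lanes):.0f} GB/s per direction")
```

Under the x8 assumption, the 20 Gen5 connectors alone work out to 160 lanes, which gives a sense of how much of the platform's I/O is routed over cables rather than slots.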

These AI servers use so much specialized PCIe connectivity that this design makes a lot of sense. Many large server vendors, even Dell, effectively use their 1U/2U standard motherboard for their GPU systems. ASRock Rack is customizing the motherboard for the application. That makes sense: when a company is investing in systems that cost hundreds of thousands of dollars, optimizing the motherboard that ties the system together is worthwhile.

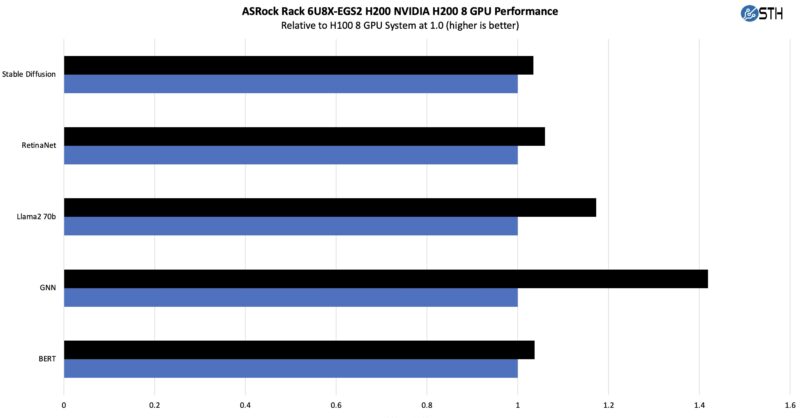

ASRock Rack 6U8X-EGS2 H200 Performance

Over the years, we have tested many AI servers. There are two major categories where the servers can gain or lose performance: cooling and power. The cooling side concerns whether the CPUs, GPUs, NICs, memory, and drives can all run at their full performance levels. On the power side, we often see different power levels configured on the NVIDIA GPUs across systems, sometimes due to air or liquid cooling choices. We are running at the official 700W GPU spec here.
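If you want to check the power side on your own system, one approach is to read each GPU's configured power limit and compare it with the 700W figure. The sketch below is an illustration, not the method used for this review: it calls `nvidia-smi` with its `--query-gpu` CSV output, and the helper names, the 1W tolerance, and the sample values in the usage block are all made up for the example.

```python
import subprocess

SPEC_WATTS = 700.0  # GPU power spec quoted in the review


def parse_power_limits(csv_text):
    """Parse 'index, watts' lines into a {gpu_index: watts} dict."""
    limits = {}
    for line in csv_text.strip().splitlines():
        index, watts = (field.strip() for field in line.split(","))
        limits[int(index)] = float(watts)
    return limits


def gpus_off_spec(limits, spec=SPEC_WATTS, tolerance=1.0):
    """Return indices of GPUs whose limit differs from spec by more than tolerance."""
    return [i for i, watts in sorted(limits.items()) if abs(watts - spec) > tolerance]


def read_power_limits():
    """Query the local GPUs; requires the NVIDIA driver and nvidia-smi on PATH."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=index,power.limit",
         "--format=csv,noheader,nounits"],
        check=True, capture_output=True, text=True,
    )
    return parse_power_limits(result.stdout)


if __name__ == "__main__":
    # Hypothetical sample text, so the example runs without a GPU present.
    sample = "0, 700.00\n1, 700.00\n2, 650.00\n"
    print(gpus_off_spec(parse_power_limits(sample)))
```

On a real server, `gpus_off_spec(read_power_limits())` returning an empty list would indicate that every GPU is configured at the 700W limit.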

ASRock Rack 6U8X-EGS2 H200 GPU Performance

On the GPU side, NVIDIA has made it very easy to get consistent results across vendors. We were able to jump on a cloud bare metal H100 server and re-run a few tests.

NVIDIA claims the H200 offers up to 40-50% better performance than the H100. That holds for workloads that need more memory bandwidth and capacity. Here is a decent range of tests and results. Of course, we did not run the H200s at 1000W, which would have had a bigger impact on the results.

This is similar to the other HGX H200 servers we have been testing this quarter.

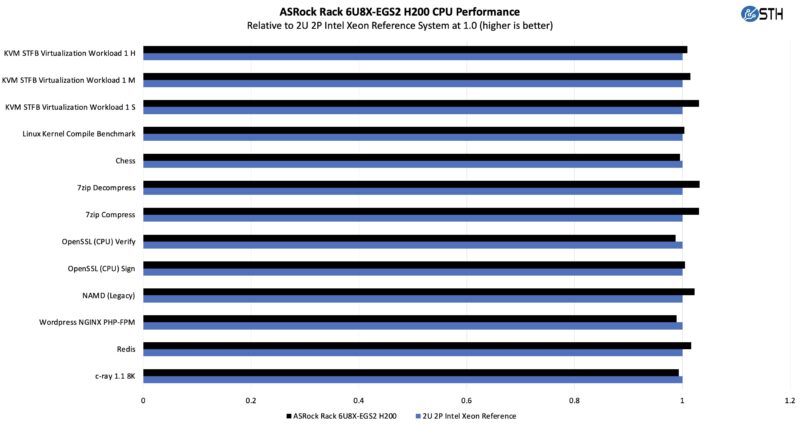

ASRock Rack 6U8X-EGS2 H200 CPU Performance

We ran through our quick test script and compared the Xeon side to our reference 2U platform.

This is more like a normal server variation, which makes sense given that the top of the server is essentially a 2U server. From what we see here, we are very close.

Small differences like these are typical in server-to-server comparisons. “Back in the old days” in 2016-2019 it was more common to see swings in GPU servers of 10% or more. Now, we expect GPU servers to track standard CPU servers in terms of CPU performance.

Next, let us talk power consumption.

STH team is doing such in-depth AI server reviews. I’d like to say thanks for this content.

The allowance for either front or rear access to the management NICs is a cute touch.

I’d be curious just how much cheaper low port count GbE switching would have to get to make reducing the internal connector count and just having both front and rear jacks live at the same time the preferred option. (or whether the unmanaged ones are already cheap enough; but customer requirements around BMC security and VLANs and such would require something nice enough that nobody bothers to make low port count versions of it that would be stupidly expensive in context.)

Great review