Last week, I was in Taipei and took apart a lot of servers. You might have seen, for example, the fun AMD Instinct MI325X UBB feature. The timing was unusual: I made a day trip out for the piece Bring a Camera Into a Top Classified US Supercomputer, El Capitan, then, about 36 hours after returning home, headed back to the airport for Taiwan. While I was abroad, I had the chance to see many servers, but the one that stood out most was the Gigabyte G383-R80-AAP1, a 4-way AMD Instinct MI300A server that is air-cooled with massive heatsinks.

This is the Massive AMD Instinct MI300A Heatsink in the Gigabyte G383-R80-AAP1

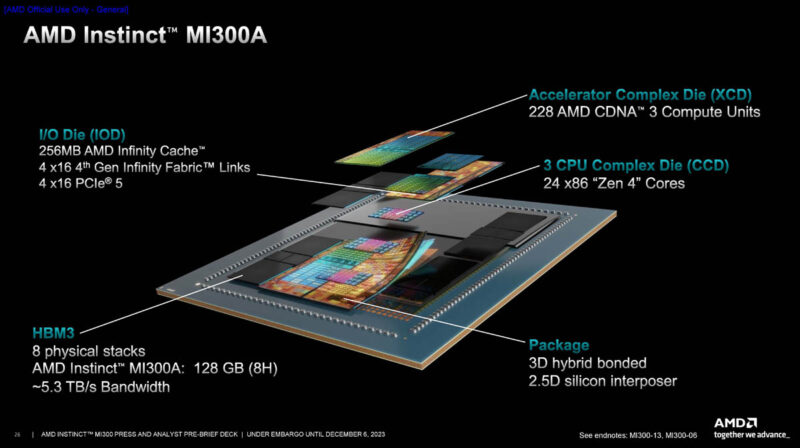

First, it is probably worth a quick refresher for many on what the AMD Instinct MI300A is. Take 24x Zen 4 cores and I/O dies with Infinity Cache, combine them with a 228 compute unit AMD CDNA 3 GPU engine and 128GB of HBM3 memory, all on a single package, and you get the MI300A.
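Since the G383-R80-AAP1 is a 4-way system, it can help to multiply those per-package figures out. Below is a minimal Python sketch of that arithmetic, using only the per-APU numbers above; the `MI300A` class and `system_totals` helper are hypothetical names for illustration. Note that the HBM3 total is spread across four packages rather than being one 512GB pool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MI300A:
    """Per-package figures for one AMD Instinct MI300A (hypothetical helper type)."""
    zen4_cores: int = 24
    cdna3_compute_units: int = 228
    hbm3_gb: int = 128


def system_totals(sockets: int, apu: MI300A = MI300A()) -> dict[str, int]:
    """Sum per-package resources across a multi-socket system."""
    return {
        "zen4_cores": sockets * apu.zen4_cores,
        "cdna3_compute_units": sockets * apu.cdna3_compute_units,
        "hbm3_gb": sockets * apu.hbm3_gb,
    }


# Four APUs, as in the Gigabyte G383-R80-AAP1.
print(system_totals(4))  # {'zen4_cores': 96, 'cdna3_compute_units': 912, 'hbm3_gb': 512}
```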

The benefit of this APU arrangement is that instead of having to move data from a CPU’s DIMMs to a GPU’s onboard HBM3 memory, everything sits in one memory pool that can be operated on by either the CPU cores or the GPU cores.
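To put a rough number on the kind of copy the APU design avoids, here is a back-of-envelope Python sketch. It assumes a discrete CPU plus GPU system connected by a PCIe 5.0 x16 link running at its theoretical rate (32 GT/s per lane, 128b/130b encoding); that link is an assumption for comparison, not something from this article, and the helper names are hypothetical. Real transfers would be slower once protocol overhead is counted.

```python
def pcie_bytes_per_second(gt_per_s: float = 32.0, lanes: int = 16) -> float:
    """Theoretical one-direction PCIe throughput with 128b/130b encoding.

    Assumed PCIe 5.0 x16 defaults; ignores TLP headers and flow control.
    """
    return gt_per_s * 1e9 * lanes * (128 / 130) / 8


def staging_copy_seconds(working_set_gb: float, link_bytes_per_s: float) -> float:
    """Time to copy a working set (decimal GB) from host DIMMs to device memory."""
    return working_set_gb * 1e9 / link_bytes_per_s


link = pcie_bytes_per_second()
print(f"{link / 1e9:.1f} GB/s")                     # 63.0 GB/s
print(f"{staging_copy_seconds(128, link):.2f} s")   # 2.03 s to fill 128GB once
```

On the MI300A, data already resident in the shared HBM3 does not need this host-to-device staging step, which is the point of the single memory pool.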

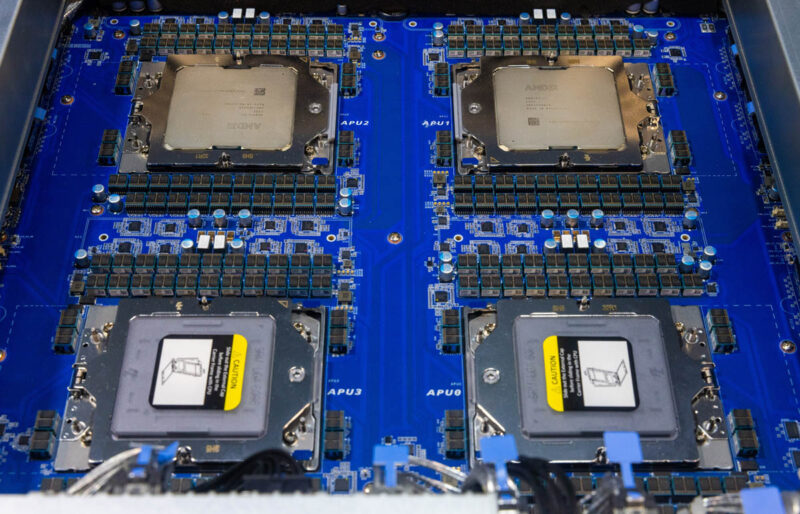

As fantastic as that sounds, it looks quite a bit like an AMD EPYC when it is packaged.

It even gets a carrier to help installation, just like an AMD EPYC does.

At SC23, we first saw the Gigabyte platform with two of these APUs installed.

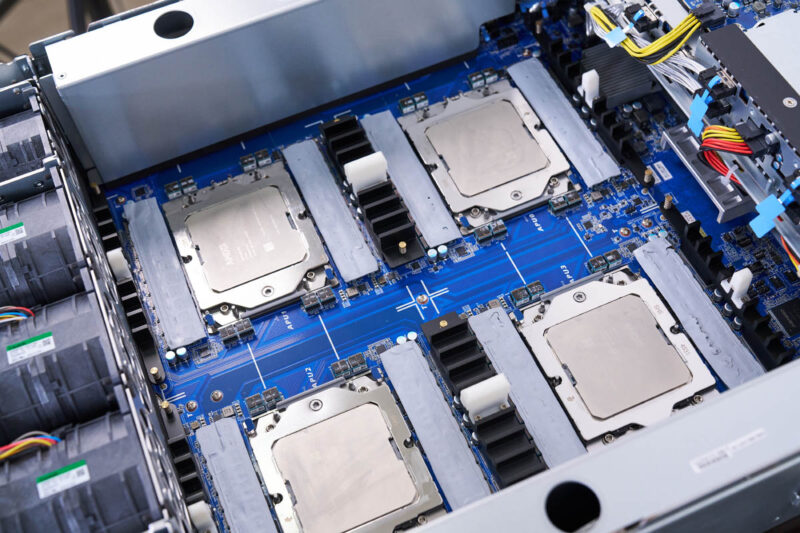

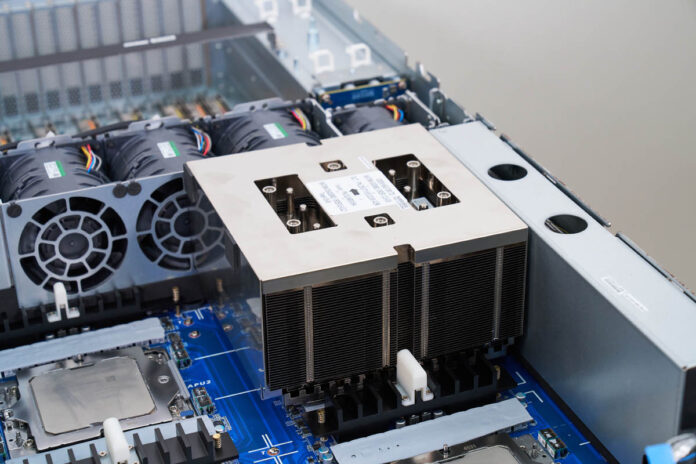

With the actual working system, there is a bit more around the sockets.

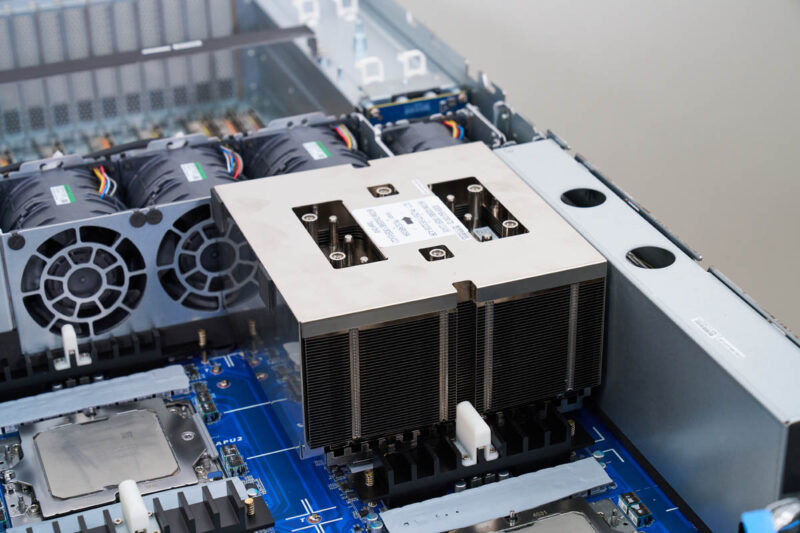

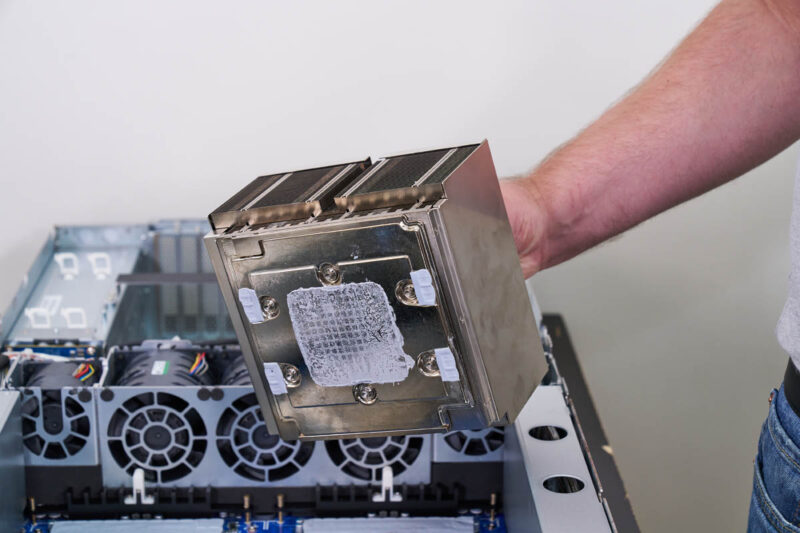

The heatsink is so big that the tiny socket below is completely covered, as are some of the surrounding power delivery components. As a result, the six screws at the top would be hard to align. Gigabyte’s solution is to put guides on the front and back of the heatsink and between the sockets to ensure the heatsink is lowered into place properly.

The top area also has finger holes to help you align it and put the heatsink in place. It is a lot of metal, so it is quite heavy when you are trying to align it and not ding the motherboard. By my third installation, it was very intuitive.

The APU side of the heatsink shows that it is cooling not just the MI300A, but also the power components around the socket. You can also see that the six screws are fairly centered in the overall heatsink. For some sense of scale, the area inside those six screws is roughly that of an AMD EPYC 9004/9005 series processor.

An AMD Instinct MI300A has a TDP of around 550W (peak is over 700W), so it is not a bad proxy for next-generation server CPUs. While GPU/AI accelerator TDPs are heading to 3kW over the course of several generations, CPUs are set to land in a 500W-600W band for the coming generations.
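With four of these APUs in one air-cooled 3U chassis, the combined heat load adds up quickly. The short Python sketch below multiplies the per-socket figures above by four; the helper name is hypothetical, and the result covers only APU package power, not fans, NICs, drives, or other platform components. Because the peak is "over 700W", the second figure is a lower bound.

```python
def apu_heat_load_watts(sockets: int, watts_per_socket: int) -> int:
    """Combined APU package power across all sockets (excludes other platform parts)."""
    return sockets * watts_per_socket


SOCKETS = 4          # 4-way MI300A system
TDP_W = 550          # approximate MI300A TDP cited above
PEAK_FLOOR_W = 700   # peak is "over 700W", so this yields a lower bound

print(apu_heat_load_watts(SOCKETS, TDP_W))         # 2200
print(apu_heat_load_watts(SOCKETS, PEAK_FLOOR_W))  # 2800
```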

Final Words

We captured a ton of data and dozens of photos with the Gigabyte G383-R80-AAP1 while we were there, so hopefully we will have a review over the next few weeks. This platform is really unique since it is designed for those who are not deploying a fully liquid-cooled HPE Cray EX supercomputer like El Capitan. Instead, this is an air-cooled 3U server. Still, the size of the heatsink was just incredible to (be)hold.